Vation Community Insights: State of GenAI Governance

The rush for enterprises to adopt AI is in full swing. Across industries, organizations are using generative AI to boost creativity, enhance efficiency, and expand human capabilities. The natural response for C-suite leaders is to control this powerful new technology with strict, top-down policies. But what if this traditional approach is not only ineffective but actually counterproductive?

Vation Ventures’ Technology Executive Outlook Report found that the top priority for AI initiatives this year is Governance, Risk, and Compliance (GRC), with 42.24% of executives citing it as a key concern. This highlights the critical tension organizations face as they navigate the rapid adoption of artificial intelligence.

The most effective strategies for managing AI are often unexpected, challenging the common belief that governance is solely about restriction and control. The reality of how AI is being applied in practice—and the unique risks it presents—requires a more nuanced approach. This article will examine where executives are directing their governance efforts, how organizations are safeguarding data in AI systems today, and the level of maturity of these governance programs.

With governance, risk, and compliance (GRC) as the number one priority for AI initiatives this year, this article sets out to understand the current state of AI governance and where organizations are focusing their governance efforts. To do so, we surveyed members of our Innovation Advisory Council, which includes CIOs, CTOs, CISOs, and other technology executives.

Key Takeaways

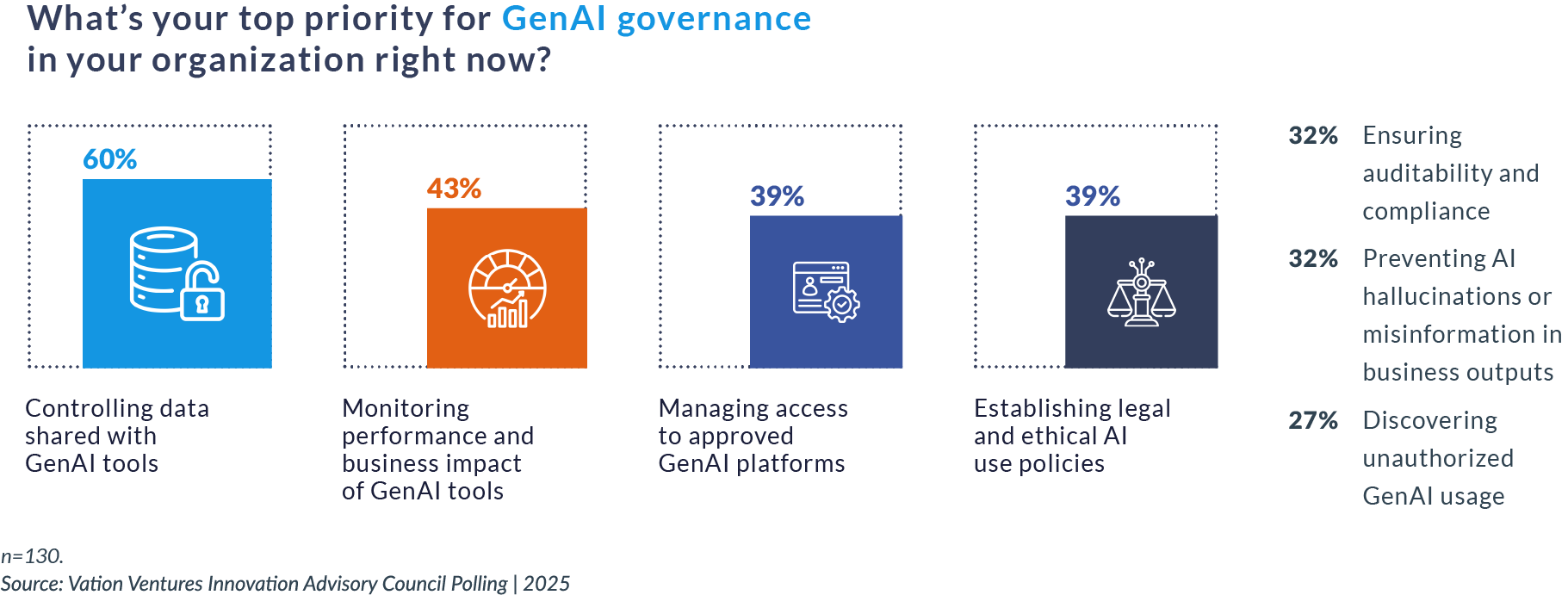

- Data control remains the paramount governance concern. Nearly 60% of executives identify managing data shared with GenAI tools as their top priority, reflecting widespread anxiety about sensitive information, intellectual property, and personally identifiable information being inadvertently exposed to large language models. This focus on preventing data leakage has become the foundation of most AI governance strategies as organizations balance innovation with the protection of competitive and regulated data.

- A dual approach of technology and training dominates current protection strategies. Organizations are primarily relying on approved GenAI platforms with built-in data controls combined with employee training and policies to manage AI risks. This reflects an understanding that governance is both a technical and human challenge, requiring sanctioned tools with embedded safeguards alongside workforce education to shape responsible behavior at scale. However, this approach requires constant evolution as AI tools advance faster than policies can adapt.

- AI governance remains largely immature despite its recognized importance. Only 8% of organizations have fully implemented governance frameworks, while 65% are either still in the planning stage or have only partially implemented them. This gap between awareness and execution stems from GenAI’s unprecedented complexity, including hard-to-measure risks such as AI hallucinations, an enterprise-wide scope spanning multiple departments, shadow AI adoption, and rapidly evolving regulations. Organizations are being forced to build governance infrastructure while their AI systems are already operating at scale.

GenAI Governance: What Matters Most Right Now for Technology Executives

When asked about their top priorities for GenAI governance, executives’ responses clearly focused on the basics of control and oversight. Nearly 60% of respondents highlighted the need to manage data shared with GenAI tools, emphasizing the importance of protecting sensitive information as AI use continues to accelerate. Other key priorities include monitoring performance and business impact, controlling access to approved platforms, and establishing policies for the legal and ethical use of resources. These priorities reflect leaders’ efforts to strike a balance between innovation and responsibility. Less emphasized but still crucial are safeguards such as ensuring auditability and compliance, preventing AI hallucinations, and detecting unauthorized use, demonstrating that governance involves both strategic oversight and practical risk management as AI tools become integral to daily operations.

Controlling Data Shared with GenAI Tools

The most crucial governance priority for organizations is gaining control over the data that interacts with GenAI systems. This means preventing sensitive, regulated, or competitive data from being ingested into large language models (LLMs) without authorization. Governance should enforce technical controls, such as sandboxing or data masking, that stop employees from accidentally including sensitive internal data, such as customer records or intellectual property, in prompts sent to external or unapproved GenAI tools, thereby preventing data leakage. Clear policies defining which data can be used for training and interaction are also crucial for complying with global data privacy laws (e.g., GDPR, CCPA) and for reducing legal risks related to copyright infringement or exposure of personally identifiable information (PII).
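As a rough illustration of data masking, the Python sketch below redacts a few common PII patterns from a prompt before it is sent to a GenAI tool. The function name `mask_prompt` and the regular expressions are hypothetical and deliberately simple; they do not come from the survey, and a production deployment would more likely rely on a dedicated DLP or PII-detection service than on hand-written patterns.

```python
import re

# Hypothetical, deliberately simple redaction rules (illustration only).
# Real deployments would use a vetted DLP/PII-detection service and
# organization-specific patterns.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13-16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def mask_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace likely PII with placeholder tokens before the prompt leaves the network.

    Returns the masked prompt and a list of the PII labels that were redacted.
    """
    findings: list[str] = []
    masked = prompt
    for label, pattern in PII_PATTERNS.items():
        masked, count = pattern.subn(f"[{label}]", masked)
        findings.extend([label] * count)
    return masked, findings


if __name__ == "__main__":
    text = "Email jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111 today"
    print(mask_prompt(text))
```

Returning the list of redacted labels alongside the masked text lets a gateway log what was removed without storing the sensitive values themselves.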

Monitoring Performance and Business Impact of GenAI Tools

A key goal for governance is to go beyond just deploying GenAI and focus on actively tracking its effectiveness, performance, and real-world business impact. Without clear metrics and ongoing oversight, organizations risk investing in tools that offer limited value or, worse, cause hidden inefficiencies or biases. This makes it essential to define key performance indicators (KPIs) to measure improvements in efficiency or cost savings. Continuous monitoring is crucial for detecting performance drift, where model outputs deteriorate over time due to changes in data or context, necessitating recalibration to maintain system reliability. Importantly, governance requires regular checks for algorithmic bias and other unintended effects to ensure the system produces fair and equitable business results for all user groups.
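One simple way to operationalize drift detection is to compare a rolling average of output-quality scores against a baseline established during acceptance testing. The sketch below assumes each GenAI response already receives a numeric quality score between 0 and 1 (for example, from human ratings or an automated evaluator); the `DriftMonitor` class, its default thresholds, and the scoring scale are all illustrative assumptions rather than a prescribed method.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class DriftMonitor:
    """Flags performance drift when recent quality scores fall below a baseline.

    baseline:  mean score observed during acceptance testing (assumed 0-1 scale).
    tolerance: how far the rolling mean may drop before recalibration is flagged.
    window:    number of most recent scored outputs to average.
    """

    baseline: float
    tolerance: float = 0.05
    window: int = 50
    scores: list[float] = field(default_factory=list)

    def record(self, score: float) -> None:
        """Add a new score, keeping only the most recent `window` scores."""
        self.scores.append(score)
        if len(self.scores) > self.window:
            self.scores.pop(0)

    def drifted(self) -> bool:
        """Return True when the rolling mean has dropped below baseline - tolerance."""
        if len(self.scores) < self.window:
            return False  # not enough evidence yet to judge drift
        return mean(self.scores) < self.baseline - self.tolerance
```

A rolling window trades responsiveness for stability: a smaller window detects degradation sooner but raises more false alarms from normal variation in output quality.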

Managing Access to Approved GenAI Platforms

Controlling who can access specific GenAI tools, and how they are used, is essential for managing security and ethical risks. It requires a centralized governance system that prevents unvetted shadow AI tools from spreading. Governance should include a formal vetting process to approve GenAI platforms, ensuring that only tools meeting strict security, compliance, and ethical standards are available to employees. Once a platform is approved, access should be managed through Role-Based Access Control (RBAC), which limits access based on the user’s role and the application’s sensitivity. High-risk use cases should be subject to stricter oversight and require human-in-the-loop review. Clear usage policies must also be established and enforced, such as banning the use of GenAI for final, unreviewed legal or medical decisions and requiring all synthetic content to be clearly labeled for end users.
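The sketch below shows, under simplified assumptions, how a registry of vetted platforms can be combined with RBAC and a human-in-the-loop flag for high-risk use cases. The platform names, roles, risk tiers, and the `authorize` function are hypothetical; in practice these checks usually live in an identity provider or API gateway rather than in application code.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    HIGH = 2


# Hypothetical registry of vetted platforms: which roles may use each one,
# and how risky its typical use cases are.
APPROVED_PLATFORMS = {
    "internal-copilot": {"roles": {"engineer", "analyst", "support"}, "risk": Risk.LOW},
    "contract-drafter": {"roles": {"legal"}, "risk": Risk.HIGH},
}


def authorize(role: str, platform: str) -> str:
    """Return 'deny', 'allow', or 'allow_with_review' for a role/platform pair."""
    entry = APPROVED_PLATFORMS.get(platform)
    if entry is None or role not in entry["roles"]:
        # Either the tool was never vetted (shadow AI) or this role lacks access.
        return "deny"
    if entry["risk"] is Risk.HIGH:
        # High-risk use: outputs must be reviewed by a human before they are acted on.
        return "allow_with_review"
    return "allow"
```

Keeping the registry as data rather than scattered conditionals makes the vetting decision auditable: adding or retiring a platform is a single, reviewable change.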

Current Approaches to GenAI Data Protection

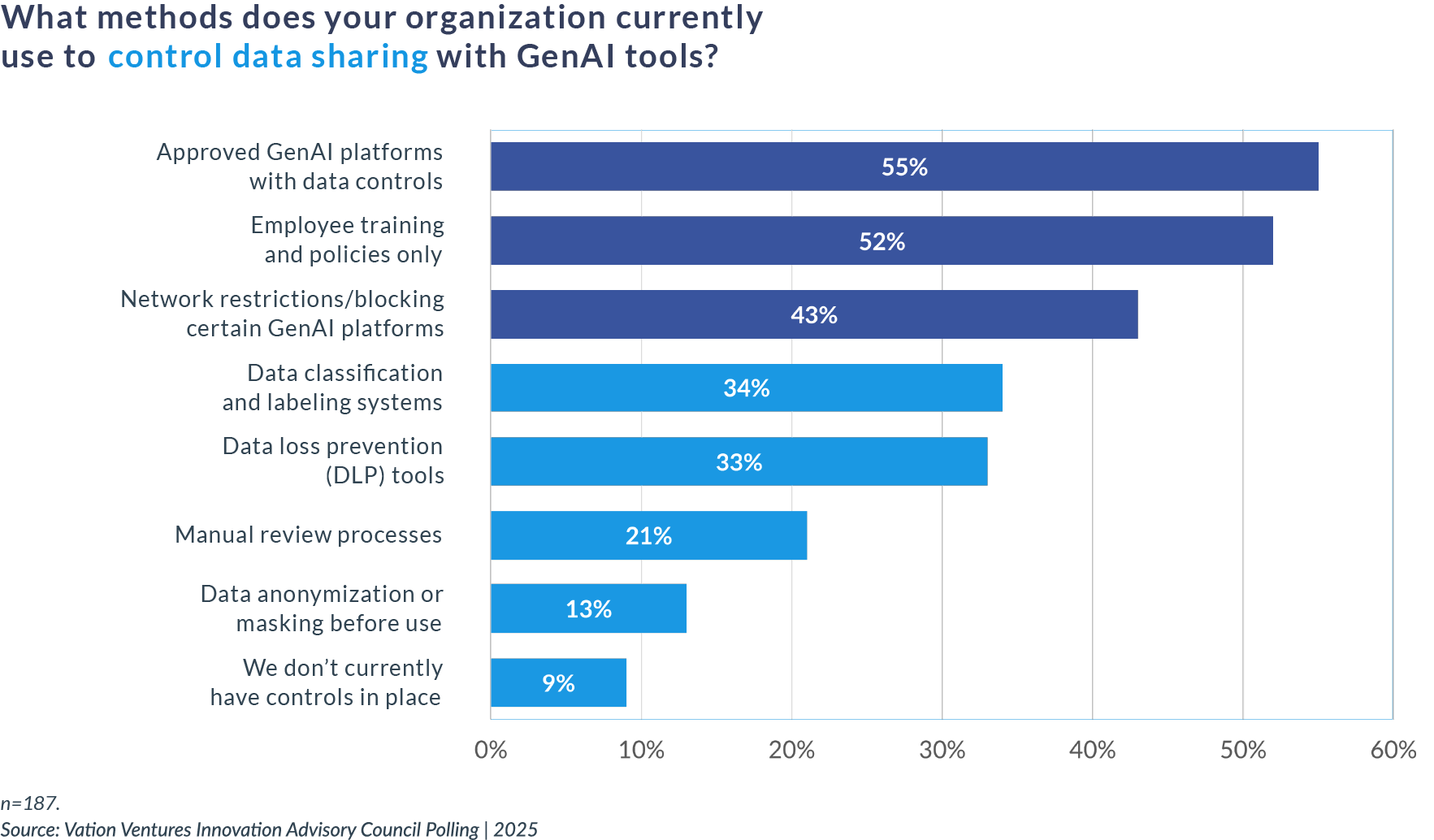

When it comes to controlling data shared with GenAI tools, organizations are relying on a broad mix of methods, reflecting both technical safeguards and human-driven approaches. The most common strategies include approved GenAI platforms with built-in data controls and employee training supported by policies, each cited by more than half of respondents. These choices underscore the dual emphasis on technology-enabled guardrails and workforce education as the primary lines of defense.

Other widely used measures include network restrictions, data classification systems, and data loss prevention tools, indicating that many organizations are implementing multiple controls to mitigate risk. Less common, though still notable, are manual review processes and data anonymization or masking before use. A small percentage of respondents reported having no controls in place, underscoring the uneven maturity across enterprises as they adapt governance practices to the realities of AI adoption.

Approved GenAI Platforms with Data Controls

The most common method organizations use to manage data risks is limiting usage to approved GenAI platforms that come with embedded data controls. This approach reflects a shift toward formalizing the AI ecosystem inside the enterprise. Rather than leaving adoption to chance, leaders are curating a trusted set of platforms that can meet baseline standards for security, compliance, and monitoring. By doing so, they not only reduce exposure to data leakage but also create a foundation for scaling AI responsibly. The emphasis is on enabling innovation through safe, sanctioned channels rather than attempting to block or ban AI outright.

Employee Training and Policies

Close behind in adoption are training and policies that guide employees on the responsible use of AI. This underscores the recognition that governance is not only a technology problem but also a human one. Policies and awareness programs are crucial for shaping behavior at scale, particularly as employees experiment with GenAI tools in their daily workflows. Training efforts serve a dual purpose: they reduce unintentional misuse of sensitive data and they foster a culture of accountability around AI. The challenge, however, is that policies must evolve as quickly as the tools themselves; otherwise, they risk becoming a box-checking exercise rather than a meaningful safeguard.

Network Restrictions and Blocking of Certain GenAI Platforms

A significant number of organizations are also turning to network restrictions or outright blocking of specific GenAI platforms. This is often seen as a defensive first step when formal governance programs are still in development. Blocking can reduce immediate risks, but it comes with trade-offs: employees may still find workarounds, and the organization risks stifling innovation if restrictions are too broad. Thoughtful leaders recognize that blocking should be a short-term tactic, not a long-term strategy. The ultimate goal is to transition from restriction to enablement by providing secure, approved tools that meet the business’s needs while keeping data protected.
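A minimal sketch of how blocking can coexist with enablement: requests to sanctioned GenAI hosts are allowed, requests to known unvetted hosts are blocked and redirected to an approved alternative, and everything else falls through to the default network policy. All domain names and the `egress_decision` function are hypothetical placeholders; real enforcement would typically happen in a secure web gateway or proxy rather than in Python.

```python
from urllib.parse import urlsplit

# Hypothetical domain lists; real values would come from the organization's
# approved-platform registry and its proxy or secure web gateway configuration.
APPROVED_GENAI_HOSTS = {"ai.internal.example.com"}
BLOCKED_GENAI_HOSTS = {"unvetted-ai.example.net", "free-chatbot.example.org"}


def _matches(host: str, domains: set[str]) -> bool:
    """True if host is one of the domains or a subdomain of one of them."""
    return any(host == d or host.endswith("." + d) for d in domains)


def egress_decision(url: str) -> str:
    """Classify an outbound request as allow, block_and_redirect, or default_policy."""
    host = (urlsplit(url).hostname or "").lower()
    if _matches(host, APPROVED_GENAI_HOSTS):
        return "allow"
    if _matches(host, BLOCKED_GENAI_HOSTS):
        # Block, but point the user to the sanctioned alternative instead of a dead end.
        return "block_and_redirect"
    return "default_policy"
```

Redirecting blocked users to an approved tool, rather than simply refusing the request, is one way to reduce the workarounds that outright blocking tends to provoke.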

From Planning to Practice: AI Governance Maturity

Despite widespread adoption of AI across enterprises, formal governance frameworks remain underdeveloped. Our research found that only 8% of organizations have fully implemented frameworks addressing risk management, compliance, and ethics. The majority occupy transitional states: 34% have partially implemented frameworks, 21% have mostly implemented them, and 31% are still in the planning stage, while 6% haven’t begun implementation at all.

This distribution highlights a critical reality: while executives acknowledge the importance of risk management, compliance, and ethical considerations, many are still building the structures and processes needed to govern AI effectively.

This slow progress reflects the unprecedented complexity of governing generative AI systems. Unlike traditional software, GenAI creates entirely new content through dynamic interactions, which produces risks that are difficult to define or measure and calls for new controls such as prompt tracking and output filtering. Phenomena such as confabulation, where AI confidently generates false information, pose specific risks in critical areas such as healthcare and legal services, yet the measurement science for these risks remains underdeveloped.
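To make prompt tracking and output filtering more concrete, the sketch below writes a JSON audit record for each prompt (storing a SHA-256 digest rather than the raw text) and withholds responses that contain blocked terms. The term list, field names, and both functions are illustrative assumptions, and a keyword match is only a crude stand-in for more sophisticated output filters.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical terms the organization does not want appearing in generated output.
BLOCKED_OUTPUT_TERMS = ("project-atlas", "internal use only")


def audit_record(user: str, platform: str, prompt: str) -> str:
    """Build one JSON audit line; the prompt is stored as a SHA-256 digest, not verbatim."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "platform": platform,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    })


def filter_output(text: str) -> tuple[str, bool]:
    """Withhold a response containing any blocked term (case-insensitive).

    Returns the text to show the user and whether the response was withheld.
    """
    lowered = text.lower()
    if any(term in lowered for term in BLOCKED_OUTPUT_TERMS):
        return "[response withheld pending review]", True
    return text, False
```

Hashing the prompt keeps the audit trail verifiable (a disputed prompt can be matched against its digest) without turning the log itself into a new store of sensitive data.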

The enterprise-wide scope of AI further complicates governance. What began as isolated experiments now touches cybersecurity, product design, customer service, and executive decision-making. Organizations struggle with “shadow AI” as departments independently adopt tools like ChatGPT or Copilot, while employees unknowingly use GenAI through embedded software components. Combined with complex upstream dependencies on third-party models and rapidly evolving regulations, such as the EU AI Act, comprehensive governance proves elusive.

Even the 29% of organizations whose frameworks are mostly or fully implemented must continue to adapt. Structural challenges, including talent shortages, role overlap between Chief AI Officers and existing executives, and reliance on legacy governance tools, mean that organizations are essentially building governance infrastructure while AI systems already operate at scale, which demands constant iteration as the technology evolves.

Conclusion

The path forward for AI governance requires technology leaders to embrace a fundamentally different mindset. The data reveals an apparent disconnect: while 42% of executives cite governance as their top AI priority, only 8% have fully implemented frameworks to address it. This gap is not a failure of intent but a reflection of GenAI’s unprecedented complexity. Traditional governance models, designed for static software systems, cannot adequately address the dynamic and generative nature of AI. The most successful organizations will be those that move beyond viewing governance as a compliance checkbox and instead treat it as an enabler of responsible innovation. This means investing in approved platforms with robust controls, continuously educating teams on responsible AI use, and building adaptive frameworks that evolve alongside the technology itself. The challenge is not to lock down AI adoption through restrictive policies, but to create guardrails that protect your organization while empowering teams to harness AI’s transformative potential. As shadow AI proliferates and regulations tighten, the organizations that act now to formalize their governance approach will be best positioned to scale AI safely and sustainably across the enterprise.

Are you seeking to establish an AI governance framework tailored to your organization’s unique risk profile and compliance needs? Our team of experts helps clients develop comprehensive AI governance strategies that strike a balance between innovation and responsible oversight, addressing data protection, ethical use, and regulatory compliance. We help technology leaders move from planning to implementation with frameworks that deliver measurable risk mitigation and business value. Explore how we can help here.

About Our Respondents: This research draws on responses from our Innovation Advisory Council, which comprises technology executives, including CIOs, CTOs, CISOs, and other senior technology leaders across various industries. These council members meet regularly to share insights on emerging technologies, governance best practices, and implementation strategies specific to AI adoption and risk management. All data was collected through anonymous polling conducted during Q2 2025.